Interactive 3D Modelling in Outdoor Augmented Reality Worlds

Research Thesis for the Degree of Doctor of Philosophy

By Wayne Piekarski

Bachelor of Engineering in Computer Systems Engineering (Hons), University of South Australia

wayne@cs.unisa.edu.au

Supervisor

Dr. Bruce Thomas

Adelaide, South Australia

February 2004

Wearable Computer Lab

School of Computer and Information Science

Division of Information Technology, Engineering, and the Environment

The University of South Australia

Table of Contents

Chapter 1 - Introduction

1.1 Problem statement

1.2 Thesis statement

1.3 Contributions

1.4 Dissertation structure

Chapter 2 - Background

2.1 Definition of augmented reality

2.2 Applications

2.3 See through display technology

2.4 3D tracking technology

2.5 Desktop direct manipulation techniques

2.6 Virtual reality interaction techniques

2.7 Physical world capture techniques

2.8 CAD modelling

2.9 Outdoor augmented reality wearable computers

2.10 Summary

Chapter 3 - Augmented reality working planes

3.1 Distance estimation cues

3.2 AR working planes definition

3.3 Coordinate systems

3.4 Plane creation

3.5 Object manipulation

3.6 Vertex placement

3.7 Accurate alignment with objects

3.8 Alignment accuracy using HMDs

3.9 Summary

Chapter 4 - Construction at a distance

4.1 Technique features

4.2 Direct object placement techniques

4.3 Body-relative plane techniques

4.4 AR working planes techniques

4.5 Integrated example

4.6 Operational performance

4.7 Summary

Chapter 5 - User interface

5.1 Design rationale

5.2 Cursor operations

5.3 Command entry

5.4 Display interface

5.5 Tinmith-Metro modelling application

5.6 Informal user evaluations

5.7 Future work

5.8 Summary

Chapter 6 - Software architecture.......................................................................................... 144

6.1 Design overview.......................................................................................................... 145

6.2 Previous work.............................................................................................................. 147

6.3 Object design............................................................................................................... 152

6.4 Object storage.............................................................................................................. 156

6.5 Implementation internals.............................................................................................. 161

6.6 Sensors and events....................................................................................................... 168

6.7 Rendering..................................................................................................................... 172

6.8 Demonstrations............................................................................................................ 174

6.9 Summary...................................................................................................................... 181

Chapter 7 - Hardware............................................................................................................ 182

7.1 Hardware inventory..................................................................................................... 182

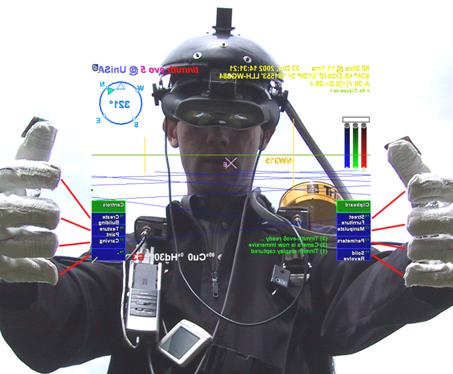

7.2 Tinmith-Endeavour backpack...................................................................................... 184

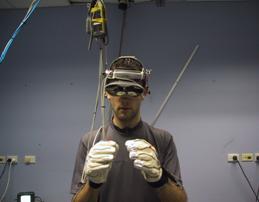

7.3 Glove input device....................................................................................................... 193

7.4 Summary...................................................................................................................... 200

Chapter 8 - Conclusion.......................................................................................................... 201

8.1 Augmented reality working planes.............................................................................. 201

8.2 Construction at a distance............................................................................................ 202

8.3 User interfaces............................................................................................................. 203

8.4 Vision-based hand tracking......................................................................................... 203

8.5 Modelling applications................................................................................................. 204

8.6 Software architecture................................................................................................... 204

8.7 Mobile hardware.......................................................................................................... 204

8.8 Future work.................................................................................................................. 205

8.9 Final remarks................................................................................................................ 207

Appendix A - Evolutions....................................................................................................... 208

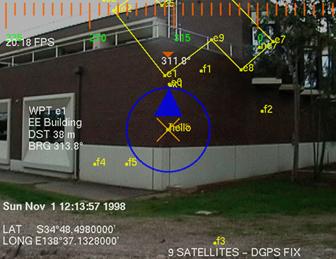

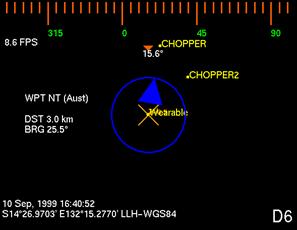

A.1 Map-in-the-Hat (1998)................................................................................................ 208

A.2 Tinmith-2 prototype (1998)......................................................................................... 210

A.3 Tinmith-3 prototype (1999)......................................................................................... 213

A.4 Tinmith-4 and ARQuake prototype (1999)................................................................ 217

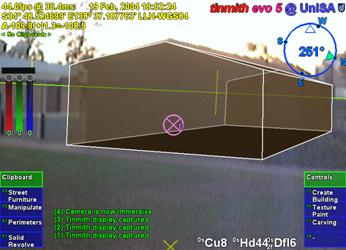

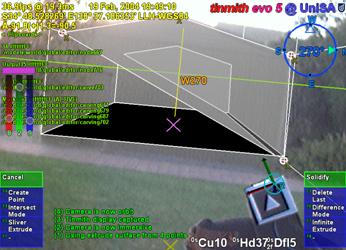

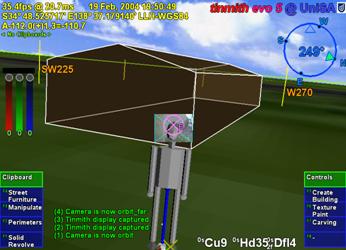

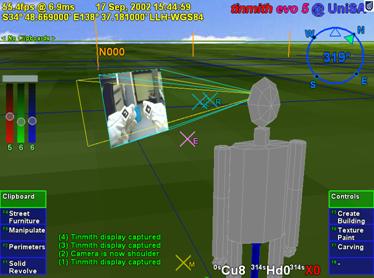

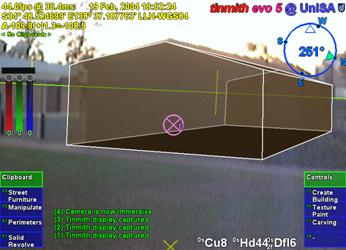

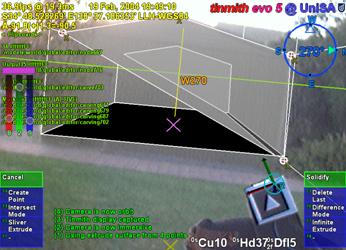

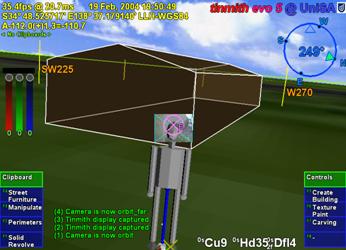

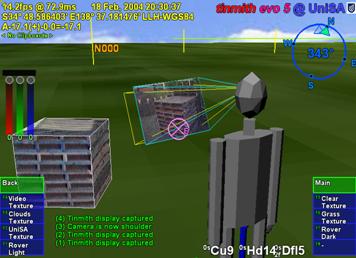

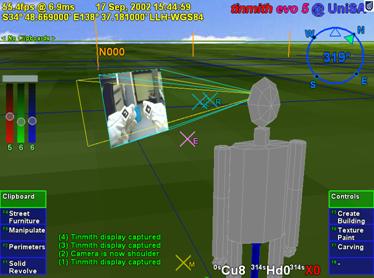

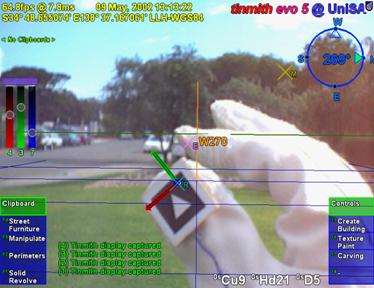

A.5 Tinmith-evo5 prototype one (2001)............................................................................ 218

A.6 Tinmith-VR prototype (2001)..................................................................................... 221

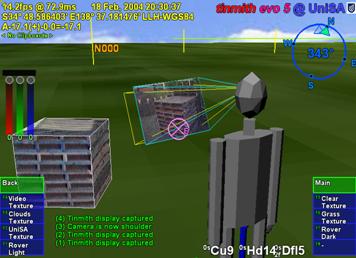

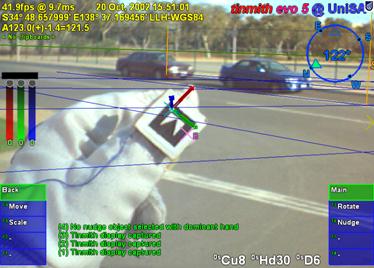

A.7 Tinmith-evo5 prototype two with

Tinmith-Endeavour (2002)................................... 222

A.8 ARQuake prototype two with

Tinmith-Endeavour (2002)........................................ 224

A.9 Summary..................................................................................................................... 226

Appendix B - Attachments.................................................................................................... 227

B.1 CD-ROM.................................................................................................................... 227

B.2 Internet........................................................................................................................ 228

References.............................................................................................................................. 229

List of Figures

Figure 1‑1. Example Sony Glasstron HMD with video camera and head tracker

Figure 1‑2. Example of outdoor augmented reality with computer-generated furniture

Figure 1‑3. Schematic of augmented reality implementation using a HMD

Figure 2‑1.... Example

of Milgram and Kishinoís reality-virtuality continuum....................... 11

Figure 2‑2.... The

first head mounted display, developed by Ivan Sutherland in 1968........... 13

Figure 2‑3.... External

and AR immersive views of a laser printer maintenance application... 13

Figure 2‑4.... Virtual

information windows overlaid onto the physical world......................... 14

Figure 2‑5.... Worker

using an AR system to assist with wire looming in aircraft assembly... 15

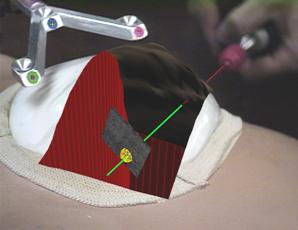

Figure 2‑6.... AR

with overlaid ultrasound data guiding doctors during needle biopsies....... 15

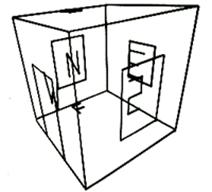

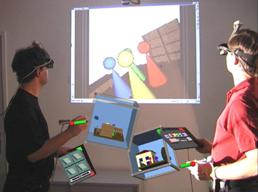

Figure 2‑7.... Studierstube

AR environment, with hand-held tablets and widgets................. 16

Figure 2‑8.... Marker

held in the hand provides a tangible interface for viewing 3D objects.. 16

Figure 2‑9.... Actors

captured as 3D models from multiple cameras overlaid onto a marker.. 17

Figure 2‑10.. Touring

Machine system overlays AR information in outdoor environments.... 18

Figure 2‑11.. BARS

system used to reduce the detail of AR overlays presented to the user. 18

Figure 2‑12.. Context

Compass provides navigational instructions via AR overlays.............. 19

Figure 2‑13.. Schematic

of optical overlay-based augmented reality...................................... 22

Figure 2‑14.. Optically

combined AR captured with a camera from inside the HMD............ 23

Figure 2‑15.. Schematic

of video overlay-based augmented reality........................................ 23

Figure 2‑16.. Example

video overlay AR image, captured directly from software................. 25

Figure 2‑17.. Precision

Navigation TCM2 and InterSense InertiaCube2 tracking devices..... 37

Figure 2‑18.. CDS

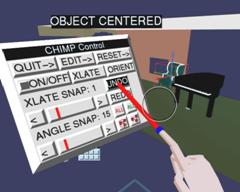

system with pull down menus and creation of vertices to extrude solids. 43

Figure 2‑19.. CHIMP

system with hand-held widgets, object selection, and manipulation... 43

Figure 2‑20.. Immersive

and external views of the SmartScene 3D modelling environment.. 44

Figure 2‑21.. Partial

UniSA campus model captured using manual measuring techniques..... 53

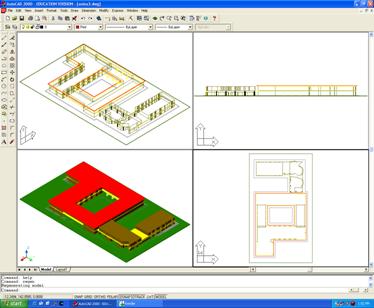

Figure 2‑22.. Screen

capture of Autodeskís AutoCAD editing a sample 3D model............... 56

Figure 2‑23.. Venn

diagrams demonstrating Boolean set operations on 2D areas A and B.... 58

Figure 2‑24.. CSG

operations expressed as Boolean sets of 3D objects.................................. 58

Figure 2‑25.. Plane

equation divides the universe into two half spaces, inside and outside... 59

Figure 2‑26.. Finite

cylinder defined by intersecting an infinite cylinder with two planes..... 59

Figure 2‑27.. Box

defined using six plane equations and CSG intersection operator............. 60

Figure 2‑28.. Wearable

input devices suitable for use in outdoor environments..................... 62

Figure 3‑1. 3D objects are projected onto a plane near the eye to form a 2D image

Figure 3‑2. Normalised effectiveness of various depth perception cues over distance

Figure 3‑3. Graph of the size in pixels of a 1m object on a HMD plane 1m from the eye

Figure 3‑4. Coordinate systems used for the placement of objects at or near a human

Figure 3‑5. World-relative AR working planes remain fixed during user movement

Figure 3‑6. Location-relative AR working planes remain at the same bearing from the user and maintain a constant distance from the user

Figure 3‑7. Body-relative AR working planes remain at a fixed orientation and distance to the hips and are not modified by motion of the head

Figure 3‑8. Head-relative AR working planes remain attached to the head during all movement, maintaining the same orientation and distance to the head

Figure 3‑9. AR working plane created along the head viewing direction of the user

Figure 3‑10. AR working plane created at a fixed offset and with surface normal matching the view direction of the user

Figure 3‑11. AR working plane created at intersection of cursor with object, and normal matching the user's view direction

Figure 3‑12. AR working plane created relative to an object's surface

Figure 3‑13. AR working plane created at a nominated object based on the surface normal of another reference object

Figure 3‑14. Manipulation of an object along an AR working plane surface

Figure 3‑15. Depth translation from the user moving a head-relative AR working plane

Figure 3‑16. AR working plane attached to the head can move objects with user motion

Figure 3‑17. Scaling of an object along an AR working plane with origin and two points

Figure 3‑18. Rotation of an object along AR working plane with origin and two points

Figure 3‑19. Vertices are created by projecting the 2D cursor against an AR working plane

Figure 3‑20. AR working plane attached to the head can create vertices near the user

Figure 3‑21. Example fishing spot marked using various shore-based landmarks

Figure 3‑22. Example of range lights in use to indicate location-relative to a transit bearing

Figure 3‑23. Sony Glasstron HMD measured parameters and size of individual pixels

Figure 3‑24. Distant landmarks must be a minimum size to be visible on a HMD

Figure 3‑25. Dotted lines indicate the angle required to separate the two marker's outlines

Figure 3‑26. Similar triangles used to calculate final positioning error function

Figure 3‑27. Rearrangement and simplification of final positioning error equation

Figure 3‑28. Derivation of alignment equation when marker B is at an infinite distance

Figure 3‑29. 3D surface plot with marker distances achieving alignment accuracy of 2 cm

Figure 3‑30. 3D surface plot with marker distances achieving alignment accuracy of 50 cm

Figure 4‑1.... AR

view of virtual table placed in alignment with physical world table........... 95

Figure 4‑2.... VR

view of bread crumbs markers defining a flat concave perimeter............... 96

Figure 4‑3.... AR

view showing registration of perimeter to a physical world grassy patch... 96

Figure 4‑4.... Example

bread crumbs model extruded to form an unbounded solid shape..... 97

Figure 4‑5.... Infinite

carving planes used to create a convex shape from an infinite solid..... 98

Figure 4‑6.... Orientation

invariant planes generated using multiple marker positions.......... 100

Figure 4‑7.... Relationship

between GPS accuracy and required distance to achieve better than 1 degree of

orientation error for two different GPS types.................................................. 100

Figure 4‑8.... Orientation

invariant planes formed using first specified angle and markers... 101

Figure 4‑9.... Box

objects can be moved into a building surface to carve out windows....... 102

Figure 4‑10.. Convex

trapezoid and concave T, L, and O-shaped objects............................ 103

Figure 4‑11.. Concave

object created using CSG difference of two convex boxes.............. 103

Figure 4‑12.. AR

working planes are used to specify vertices and are projected along the surface

normal for carving the objectís roof................................................................................................ 104

Figure 4‑13.. AR

view of infinite planes building created with sloped roof......................... 105

Figure 4‑14.. AR

view of infinite planes building being interactively carved with a roof.... 105

Figure 4‑15.. VR

view of building with sloped roof, showing overall geometry.................. 105

Figure 4‑16.. Frames

of automobile carving, with markers placed at each corner................. 106

Figure 4‑17.. Final

resulting automobile shown overlaid in AR view, and in a VR view..... 106

Figure 4‑18.. Schematic

illustrating the painting of a window onto a wall surface............... 107

Figure 4‑19.. Examples

showing surface of revolution points for tree and cylinder objects. 107

Figure 4‑20.. AR

view of surface of revolution tree with markers on AR working plane..... 108

Figure 4‑21.. VR

view of final surface of revolution tree as a solid shape............................ 108

Figure 4‑22.. Outdoor

stack of pallets approximating a box, before modelling.................... 109

Figure 4‑23.. VR

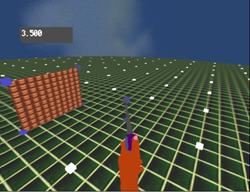

view of final model with captured geometry and mapped textures.......... 109

Figure 4‑24.. AR

view of final abstract model, including street furniture items................... 111

Figure 4‑25.. VR

view of final abstract model, including street furniture items................... 111

Figure 5‑1.... Each

finger maps to a displayed menu option, the user selects one by pressing the

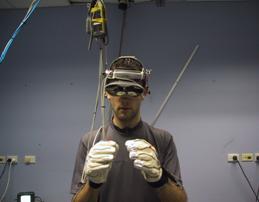

appropriate finger against the thumb.............................................................................................. 117

Figure 5‑2.... Immersive

AR view, showing gloves and fiducial markers, with overlaid modelling cursor

for selection, manipulation, and creation................................................................................ 118

Figure 5‑3.... Translation

operation applied to a virtual tree with the userís hands............... 120

Figure 5‑4.... Scale

operation applied to a virtual tree with the userís hands......................... 121

Figure 5‑5.... Rotate

operation applied to a virtual tree with the userís hands...................... 122

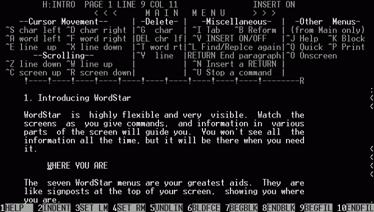

Figure 5‑6.... Original

WordStar application, showing menu toolbar at bottom of screen.... 124

Figure 5‑7.... Immersive

AR overlay display components explained..................................... 128

Figure 5‑8.... Top

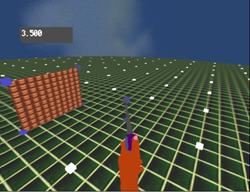

down aerial view of VR environment in heading up and north up mode. 129

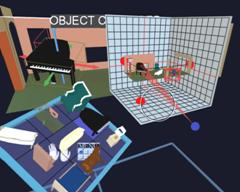

Figure 5‑9.... Orbital

view centred on the user with a VR style display................................ 129

Figure 5‑10.. User,

view plane, 3D world objects, and distant projection texture map......... 131

Figure 5‑11.. Immersive

view of Tinmith-Metro with 3D cursor objects appearing to be floating over the

incoming video image....................................................................................................... 132

Figure 5‑12.. External

view of Tinmith-Metro with userís body and 3D environment......... 132

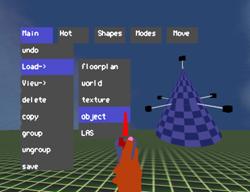

Figure 5‑13.. Options

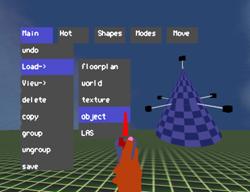

available from the top-level of Tinmith-Metroís command menu...... 133

Figure 5‑14.. Menu

hierarchy of available options for the Tinmith-Metro application.......... 134

Figure 5‑15.. Original

horizontal menu design in immersive AR view.................................. 139

Figure 5‑16.. View

of the user interface being tested in a VR immersive environment........ 140

Figure 6‑1.... Overall

architecture showing sensors being processed using libraries and application

components, and then rendered to the userís HMD..................................................................... 146

Figure 6‑2.... Layers

of libraries forming categories of objects available to process data...... 153

Figure 6‑3.... Data

values flow into a node for processing, producing output values............ 153

Figure 6‑4.... Expanded

view of data flow model showing stages of processing................. 154

Figure 6‑5.... Network

distribution is implemented transparently using automatically generated

serialisation callbacks and a network transmission interface............................................................... 156

Figure 6‑6.... Examples

demonstrating usage of the hierarchical object store....................... 158

Figure 6‑7.... Simplified

layout of composite Position class, showing nested objects........... 160

Figure 6‑8.... Edited

extract from the is-300.h orientation tracker C++ definition file......... 162

Figure 6‑9.... Complete

XML serialisation of the IS-300 orientation tracker object............. 163

Figure 6‑10.. C++

code demonstrating setup and execution of callbacks............................. 164

Figure 6‑11.. Mathematical

operations possible between absolute and relative objects........ 169

Figure 6‑12.. Distorted

view of Tinmith-Metro showing improperly placed avatar objects when the

resolution of OpenGLís internal values is exceeded............................................................. 170

Figure 6‑13.. User

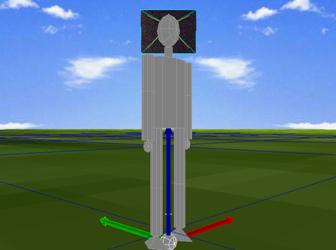

is represented in the 3D world with a hierarchical avatar model............. 176

Figure 6‑14.. Indoor

tracking system with backpack, head and shoulder mounted video cameras, GPS

antenna, and fiducial markers on the hands, walls and ceiling.............................................. 177

Figure 6‑15.. Partial

layout of manipulation menu, with internal commands and next path. 179

Figure 7‑1.... Data

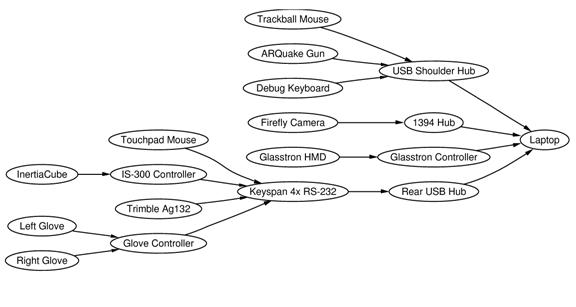

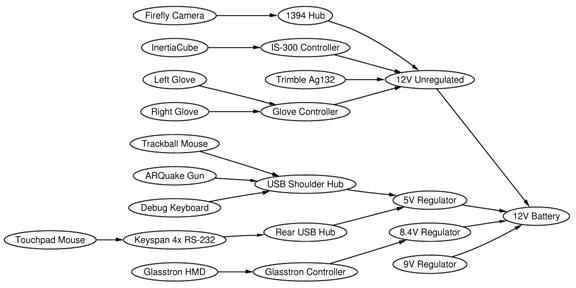

bus interconnect diagram of components used for mobile outdoor AR.. 184

Figure 7‑2.... Rear

view of previous backpack design, showing tangled mess of cabling..... 185

Figure 7‑3.... Front

and rear views of the Tinmith-Endeavour backpack in use outdoors..... 185

Figure 7‑4.... Design

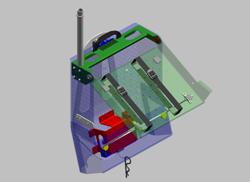

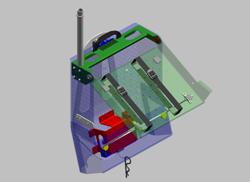

of polycarbonate housing with hinged laptop holder and internals..... 187

Figure 7‑5.... Backpack

shown in desktop configuration, permitting normal use outside..... 187

Figure 7‑6.... Interior

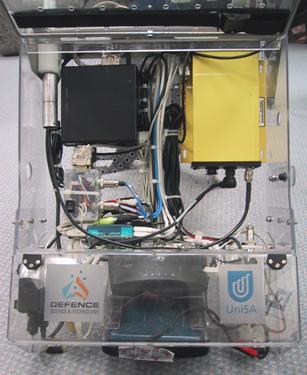

of backpack housing, showing internal components and cabling........ 188

Figure 7‑7.... Power

supply breakout box, with +5V, +9V, and +12V at each connector.... 189

Figure 7‑8.... Power

bus interconnect diagram of components used for mobile outdoor AR 190

Figure 7‑9.... Two

USB ports, glove connector, and cables mounted onto shoulder straps.. 190

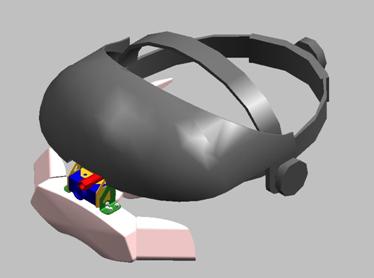

Figure 7‑10.. Design

of brackets to attach Sony Glasstron and Firefly camera to a helmet.. 191

Figure 7‑11.. Designs

of various versions of the glove and attached fiducial markers.......... 194

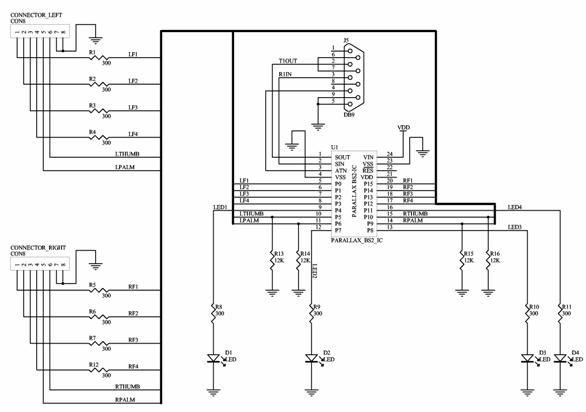

Figure 7‑12.. Circuit

schematic for the low power glove controller....................................... 195

Figure 7‑13.. Example

use of the fiducial marker tracking used for a 3D cursor.................. 196

Figure 7‑14.. ARToolKit

default camera_para.dat file, with error x=2.5, y=48.0................. 198

Figure 7‑15.. Graphical

depictions showing original and new orthogonal camera model..... 199

Figure A‑1... Phoenix-II

wearable computer with batteries, cables, and belt mounting........ 209

Figure A‑2... Map-in-the-Hat

prototype inside ruck sack, with antenna, cables, and HMD. 209

Figure A‑3... Screen

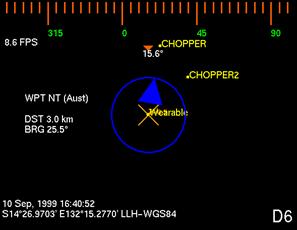

shots of Map-in-the-Hat indicating a waypoint on the display............ 209

Figure A‑4... Tinmith-2

hiking frame with some equipment attached................................... 211

Figure A‑5... 2D

top down map overlaid on physical world (using offline AR overlay)...... 212

Figure A‑6... 2D

top down map overlay with current location relative to nearby buildings. 212

Figure A‑7... 3D

wireframe overlay of building, with small extension made to the left....... 213

Figure A‑8... Tinmith-3

backpack with HMD, head tracker, and forearm keyboard............ 214

Figure A‑9... Software

interconnect diagram for Tinmith-2 to Tinmith-4 prototypes........... 215

Figure A‑10. View

of ModSAF tool with simulated entities and a wearable user................ 215

Figure A‑11. Wearable

user in outdoor environment generates DIS packets........................ 216

Figure A‑12. MetaVR

view of avatar for wearable user and helicopter for ModSAF entity 216

Figure A‑13. DIS

entities overlaid in yellow on HMD with a top down view..................... 216

Figure A‑14. Visualising

artificial CAD building extensions overlaid on physical world..... 217

Figure A‑15. ARQuake

implemented using optical AR with virtual monsters shown.......... 218

Figure A‑16. Mock

up demonstrating how a modelling system could be used outdoors...... 219

Figure A‑17. Side

view of original Tinmith-evo5 backpack, with cabling problems............. 220

Figure A‑18. Close

up view of messy cable bundles and miscellaneous input devices......... 220

Figure A‑19. Screen

shots of the first Tinmith-Metro release in use outdoors...................... 221

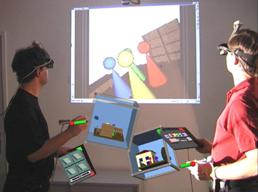

Figure A‑20 VR immersive system used to

control the Tinmith-Metro user interface.......... 222

Figure A‑21. Side

and front views of the Tinmith-Endeavour backpack in use outdoors.... 223

Figure A‑22. Screen

capture of the latest Tinmith-Metro release in use outdoors................. 224

Figure A‑23. USB

mouse embedded into a childrenís bubble blowing toy.......................... 225

Figure A‑24. Monsters

overlaid on the physical world with video overlay ARQuake.......... 225

List of Tables

Table 2‑1. Comparison between optical and video combined AR systems

Table 2‑2. Comparison between various types of 3D tracking technology

Table 2‑3. Comparison between forms of VR interaction techniques

Table 3‑1. Alignment accuracies for markers at various distances from the user

Table 4‑1. Top down view of building shapes with vertices (v), edges (e), and facets (f)

Table 6‑1. Approximate round trip delays experienced for network serialisation

Table 7‑1. Current backpack components with cost, location, and power consumption

Abbreviations and Definitions

1394 - IEEE Standard 1394, also referred to as Firewire or i.Link [IEEE95]

2D - Two Dimensional in XY

3D - Three Dimensional in XYZ

AAAD - Action at a distance, first defined by Mine [MINE95a]

ACRC - Advanced Computing Research Centre at UniSA

AGD66 - Australian Geodetic Datum 1966 [ICSM00]

AGD84 - Australian Geodetic Datum 1984 [ICSM00]

AR - Augmented Reality

CIS - School of Computer and Information Science at UniSA

COTS - Commercial Off The Shelf

CRT - Cathode Ray Tube (technology used in television and monitor displays)

CSG - Constructive Solid Geometry

DGPS - Differential GPS

DIS - IEEE Standard 1278, the Distributed Interactive Simulation protocol [IEEE93]

DOF - Degrees of Freedom ([X, Y, Z] for position, [θ, φ, ϕ] for orientation)

3DOF - Three degrees of freedom, only three measurements, such as only orientation or position tracker information

6DOF - Six degrees of freedom, information about orientation and position, a complete tracking solution

DSTO - Defence Science and Technology Organisation, Adelaide, South Australia

ECEF - Earth-Centred Earth-Fixed Cartesian coordinates, in metres [ICSM00]

Evo - Evolution or version number

FOV - Field of View, the angle of the user's view that a head mounted display or camera can cover

GLONASS - Russian Federation, Global Navigation Satellite System (Global'naya Navigatsionnaya Sputnikovaya Sistema in Russian)

GPS - US Department of Defence, Global Positioning System

HMD - Head Mounted Display

HUD - Heads Up Display

ITD - Information Technology Division (located at DSTO Salisbury, Adelaide)

IPC - Inter-Process Communication

LCD - Liquid Crystal Display

LOD - Land Operations Division (located at DSTO Salisbury, Adelaide)

LLH - Latitude Longitude Height spherical polar coordinates [ICSM00]

LSAP - Land Situational Awareness Picture System

MR - Mixed Reality

NFS - Sun Microsystems' Network File System [SAND85]

OEM - Original Equipment Manufacturer

RPC - Sun Microsystems' Remote Procedure Calls

RTK - Real-Time Kinematic (centimetre grade GPS technology)

SERF - Synthetic Environment Research Facility (located at DSTO Salisbury, Adelaide)

SES - Scientific and Engineering Services (located at DSTO Salisbury, Adelaide)

STL - Standard Template Library (for the C++ language)

SQL - Structured Query Language

Tinmith - This Is Not Map In The Hat (named for historical purposes)

UniSA - University of South Australia

USB - Universal Serial Bus

UTM - Universal Transverse Mercator grid coordinates, in metres [ICSM00]

VE - Virtual Environment

VR - Virtual Reality

WCL - Wearable Computer Lab at the University of South Australia

WIM - Worlds in Miniature [STOA95]

WIMP - Windows, Icons, Menus, and Pointer

WGS84 - World Geodetic System 1984 [ICSM00]

X - The X Window System

XML - Extensible Mark-up Language

Summary

This

dissertation presents interaction techniques for 3D modelling of large

structures in outdoor augmented reality environments. Augmented reality is the

process of registering projected computer-generated images over a user's view

of the physical world. With the use of a mobile computer, augmented reality can

also be experienced in an outdoor environment. Working in a mobile outdoor

environment introduces new challenges not previously encountered indoors,

requiring the development of new user interfaces to interact with the computer.

Current AR systems only support limited interactions and so the complexity of

applications that can be developed is also limited.

This

dissertation describes a number of novel contributions that improve the state

of the art in augmented reality technology. Firstly, the augmented reality

working planes technique gives the user the ability to create and edit objects

at large distances using line of sight and projection techniques. This

technique overcomes limitations in a human's ability to perceive depth, and

requires simple input devices that are available on mobile computers. A number

of techniques that leverage AR working planes are developed, collectively

termed construction at a distance: street furniture, bread crumbs, infinite

planes, projection carving, projection colouring, surface of revolution, and

texture map capture. These techniques can be used to create and capture the

geometry of outdoor shapes using a mobile AR system with real-time verification

and iterative refinement. To provide an interface for these techniques, a novel

AR user interface with cursors and menus was developed. This user interface is

based around a pair of pinch gloves for command input, and the use of a custom

developed vision tracking system for use in a mobile environment. To develop

applications implementing these contributions, a new software architecture was

designed to provide a suitable abstraction to make development easier. This

architecture is based on an object-oriented data flow approach, uses a special

file system notation object repository, and supports distributed objects. The

software requires a platform to execute on, and so a custom wearable hardware

platform was developed. The hardware is based around a backpack that contains

all the equipment required, and uses a novel flexible design that supports

simple reconfiguration.

Based on these

contributions, a number of modelling applications were developed to demonstrate

the usefulness of these techniques. These modelling applications allow users to

walk around freely outside, and use proprioception and interactions with the hands

to control the task. Construction at a distance allows the user to model

objects such as buildings, trees, automobiles, and ground features with minimal

effort in real-time, and at any scale and distance beyond the user's reach.

These applications have been demonstrated in the field to verify that the

techniques can perform as claimed in the dissertation.

Declaration

I declare that this thesis does not

incorporate without acknowledgment any material previously submitted for a

degree or diploma in any university and that to the best of my knowledge it does

not contain any materials previously published or written by another person

except where due reference is made in the text.

______________________

Wayne Piekarski

Adelaide,

February 2004

______________________

Dr Bruce Thomas – Thesis Supervisor

Adelaide,

February 2004

Acknowledgements

A dissertation does not just appear out

of nowhere, and although it is supposed to be a contribution by one person for

a PhD, there are still a lot of people who have helped me out over the years. I

have been fortunate enough to have had the support of so many people and

without it this would not have been possible. While most people did not help

directly on the project, every one of them contributed in some way towards

helping me to get where I am today, even things like just being a friend and

going out and having fun. Others were responsible for giving me a push in the

right direction in life, and for everyone listed here I am eternally grateful

for their help.

Firstly there is the Wearable Computer

Lab crew. Although I initially started in the lab alone, over the years we have

grown to being a major lab at the university, and I have worked with a number

of people - Ben Close, Hannah Slay, Aaron Toney, Ben Avery, Ross Smith, Peter

Hutterer, Matthias Bauer, Pierre Malbezin, Barrie Mulley, Matthew Schultz,

Scott Sheridan, Leonard Teo, John Squires, John Donoghue, Phil DeBondi, and Dan

Makovec. Many of us have spent countless late nights working on projects

to meet deadlines and the spirit of our team is truly awesome.

In the CIS department I also have a

number of friends apart from those in the WCL who I go to lunch with almost

every day, and I also enjoy spending time with them outside of work hours -

Grant Wigley, Greg Warner, Malcolm Bowes, Stewart Itzstein, and Toby Richer. I

would especially like to thank Grant for his friendship during the PhD program,

as we both started at the same time and have helped each other out

considerably. On behalf of the lunch crew I would also like to express

gratitude to the Brahma Lodge Hotel, for serving up copious amounts of the

finest cheesy potatoes on the planet to fuel our lunch time cravings.

In the CIS department I have worked with

three heads of school over the years and each has supported me in my

endeavours - Andy Koronios, Brenton Dansie, and David Kearney. With their

financial and leadership contributions I have been given the resources I need

to complete this PhD and also help to develop the WCL into what it is today.

Staff members in the CIS department have

also been extremely helpful. The numerous general staff performed the many

tasks that are required to keep the department running each day, and were

always very happy to help out when required. They helped to organise my

teaching trips, the ordering of equipment, and dealing with finances. Greg

Warner and Malcolm Bowes ran the department servers and allowed us to perform

unorthodox computer networking in the lab. Frank Fursenko and Tony Sobey also

discussed with me C++ and graphics programming on a number of occasions.

Millist Vincent assisted by proofreading parts of the thesis and provided

technical comments.

The DSTO Land Operations Division with

Victor Demczuk and Franco Principe were initially responsible for donating

various wearable computers and resources to the department. These were used to

start the initial projects in the WCL, which would not have existed without them.

The Information Technology Division at DSTO has also been instrumental in

further research work we have done, giving us a number of large grants for

equipment as well as the design of our new backpack. I would especially like to

thank Rudi Vernik for his vision in granting this funding to the WCL and it has

helped to make our research first class work. The SES group with John Wilson,

Paul Zalkauskas, Chris Weckert, and Barry Crook, led by Peter Evdokiou, have to

be the finest and most professional group of engineers I have ever met.

Together they manufactured the Tinmith-Endeavour backpack design which dazzles

people at conferences all over the world.

When I was still growing up in 1993, I

had the fortune of using an Internet dial up service run by Mark Newton. He

introduced me to the one true operating system Unix, and all its amazing

features. I used his machine to learn how to use Unix and the fledgling

Internet, and through this I met a number of other people in the area. The late

Chris Wood took the time to teach me how to write Makefiles, use Emacs (the one

true editor), and how to correct bugs in my first X11 application. These

contributions helped to steer my professional development toward Unix

development which would come into much use at university. The Linux community

has also supported me by inviting me to speak at most of their conferences,

allowing me to teach audiences about my research work and to learn from others.

The developers who donated their time to the development of drivers for the

hardware I use have been most helpful; without these this project would not

have been possible.

When I was just starting at university I

was fortunate enough to meet Steve Baxter. He had just started up an Internet

company called SE Net along with his wife Emily Baxter and friend Chris Foote,

and asked me to work as the first employee of the company helping with sales

and support. As the company grew I was given the role of Manager of R&D,

and given the task of developing the systems that controlled the entire

company. Steve trusted the future of his entire company to my abilities. This

enabled me to gain a wealth of experience in leadership and design that would

never be given to most 18 year olds, and for this I am very grateful. Together

we built a company that led the field in a number of areas. As part of the team

at SE Net, I have many friends - Matt Altus, David Kuzmak, Richard and Genni

Kaye, Robert Gulley, Andrew Xenides, Rebecca Razzano, Lindsay Whitbread, Megan

Hehir, Mark Mills, and Scott Smith. Unfortunately SE Net has now been absorbed

by the new owners of the company, and so the fine traditions and spirit from SE

Net are no longer around, but exist in our memories forever.

During my travels overseas I have met a

number of great people who have been friendly and helpful. I would like to

especially thank the Computer Science department at the University of North Carolina at Chapel Hill for allowing me to spend three months performing research there.

Sally Stearns and Ray Thomas were kind enough to let me stay at their house for

the first few days while I found a place to stay. At UNC I made many friends

such as Mark Harris, Scott Cooper, Ken Hoff, Benjamin Lok, Samir Nayak, David

Marshburn, Andrew Nashel, and Stefan Sain, as well as the Pi Lambda Phi

fraternity and Drink Club crew.

There are also a number of other people

who do not work with me but have been friends for many years and I would also

like to thank them for their support - David Pridgeon, Trent Greenland, Ghassan

Abi Mosleh, Rebecca Brereton, Donna Harvey, Krishianthi Karunarathna, Josie

Brenko, Tam Nguyen, Sarah Bolderoff, and Derek Munneke.

The most instrumental person for this

dissertation was my supervisor Dr Bruce Thomas. I have worked with Bruce for

the last five years first as a final year undergraduate project student, and

then as a PhD student. Bruce had the insight to move into wearable computers

and augmented reality in the very early days and formed the Wearable Computer

Lab we have today. Bruce has helped me to gain an international profile in the

AR and wearables community, by generously giving me the funding to travel to

numerous places all over the world and to meet other researchers (and obtain a

frequent flyer gold card). This international development has strengthened my

PhD with experience from a wide range of people and further motivated my

research with fresh ideas. I would also like to thank Bruce for the countless

hours I have spent with him discussing research, proof reading papers and this

dissertation, talking about life in general, and having a beer as friends when

travelling.

To achieve a PhD at the University of South Australia, the dissertation must be reviewed by two external experts in the

area of research. I was fortunate enough to have Professor Steven Feiner from Columbia University and Associate Professor Mark Billinghurst from HIT Lab New Zealand as reviewers, who are both outstanding researchers in the international community.

Each of them carefully read through the hundreds of pages of this dissertation

and gave me excellent feedback which has been integrated into this final

version. I would like to thank both of them for their time and dedication to

reviewing my work and helping to improve it.

Most importantly of all, I would like to

thank my mum Kris, dad Spishek, brother Arron, and my grandparents for

supporting me for the last 25 years. My family also helped me build some of the

early backpack prototypes in the garage, making an important contribution to

the project. It is through their encouragement and care that I have made it

through all the steps to reach this point in life, and I couldn't have done it

without them. When my dad bought me a Commodore 64 when I was a little boy, who

would have thought I would have ended up here today? My family has always taken

care of me and I love them all very much.

In summary, I would like to thank

everyone for putting up with me for the last couple of years. I believe that

this dissertation has made a real contribution to the field of computer science

and I hope that everyone that reads this dissertation finds it useful in their

work. It has been a fun journey so far, and I look forward to catching up with

everyone and having lots of fun and good times, because that is the most

important thing of all.

Now it is time to catch up on some sleep

and have a holiday! (Well, not really - there is still plenty of other work to

do now)

Regards,

______________________

Wayne Piekarski

Adelaide,

February 2004

Inspiration

"Another noteworthy characteristic of

this manual is that it doesn't always tell the truth ... The author feels that

this technique of deliberate lying will actually make it easier for you to

learn the ideas. Once you understand the simple but false rule, it will not be

hard to supplement that rule with its exceptions."

Donald Knuth, from the preface to The

TeXbook

"If you do choose to use a computer,

beware the temptation it offers to let manuscript preparation displace

composition. They are two separate activities, best done separately.

Hyphenation and exposition are at war with one another. Pagination vies with

content. The mind busy fretting over point size has no time left over to

consider clarity. If you need a break from the ardors of composition, try the

time-honored ones like poking the fire or baking bread. They smell good, and

they don't give you any illusion that your paper is making progress while you

indulge in them."

Mary-Claire van Leunen

Chapter 1 - Introduction

"We all agree that your theory is

crazy, but is it crazy enough?"

Niels Bohr (1885-1962)

Augmented reality (AR) is the

registration of projected computer-generated images over a user's view of the

physical world. With this extra information presented to the user, the physical

world can be enhanced or augmented beyond the user's normal experience. The

addition of information that is spatially located relative to the user can help

to improve their understanding of it. In 1965, Sutherland described his vision

for the Ultimate Display [SUTH65], with the goal of developing

systems that can generate artificial stimulus and give a human the impression

that the experience is actually real. Sutherland designed and built the first

optical head mounted display (HMD) that was used to project computer-generated

imagery over the physical world. This was the first example of an augmented

reality display [SUTH68]. Virtual reality (VR) was developed

later using opaque display technology to immerse the user into a fully

synthetic environment. One of the first integrated environments was by Fisher

et al., combining tracking of the head for VR with the use of tracked gloves as

an input device [FISH86].

Augmented reality and virtual reality

share common features in that they present computer-generated images for a user

to experience, with information anchored to 3D locations relative to the user's

display, their body, or the world [FEIN93b]. The physical world seen when

using AR can be thought of as a fourth kind of information that the user can

experience, similar to world-relative display but not artificially generated. A

typical example of a head mounted display is shown in Figure 1‑1, and an

example AR scene with both physical and virtual worlds is depicted in Figure 1‑2.

The schematic diagram in Figure 1‑3 depicts how a see-through HMD (used

to produce AR images for the user) can be conceptualised, and the next chapter

discusses in depth their implementation. While other forms of sensory

stimulation such as haptics and audio are also available to convey information

to the user, these will not be discussed since the focus of this dissertation

is HMD-based AR.

Figure 1‑1  Example Sony Glasstron HMD with video camera and head tracker

Although the first HMD was implemented in

1968 and performed augmented reality, the first main area of research with

these devices was for virtual reality. VR shares a number of research

problems with AR, but does not rely on the physical world to provide images and

can also be viewed on display monitors or projectors. Since the user is

normally tethered to the VR system, they are not able to walk large distances

and virtual movement techniques such as flying are required to move beyond

these limits. These same problems restricted initial AR research because, by

its very nature, the user would like to walk around and explore the physical

world overlaid with AR information without being tethered to a fixed point.

While there are some important uses for AR in fixed locations, such as assisted

surgery with overlaid medical imagery [STAT96] or assistance with assembly tasks [CURT98], the ability to move around freely

is important. When discussing the challenges of working outdoors Azuma states

that the ultimate goal of AR research is to develop systems that "can operate

anywhere, in any environment" [AZUM97b].

A pioneering piece of work in mobile

augmented reality was the Touring Machine [FEIN97], the first example of a mobile

outdoor AR system. Using technology that was small and light enough to be worn,

a whole new area of mobile AR research (both indoor and outdoor) was created. While

many research problems are similar to indoor VR, there are unsolved domain

specific problems that prevent mainstream AR usage. Older survey papers (such

as by Azuma [AZUM97a]) cover many technological problems

such as tracking and registration. As this technology has improved, newer

research is focusing on higher-level problems such as user interfaces, as

discussed by Azuma et al. [AZUM01].

Figure 1‑2  Example of outdoor augmented reality with computer-generated furniture

Figure 1‑3  Schematic of augmented reality implementation using a HMD

1.1 Problem statement

Many outdoor AR systems produced to date

rely only on the position and orientation of the user's head in the (sometimes

limited) physical world as the user interface, with controls that adjust

the virtual environment indirectly. Without a rich user interface

capable of interacting with the virtual environment directly, AR systems are

limited to changing only simple attributes, and rely on another computer

(usually an indoor desktop machine) to actually create and edit 3D models. To

date, no one has produced an outdoor AR system with an interface that allows

the user to leave behind all their fixed equipment and independently perform 3D

modelling in real-time. This dissertation explores user interface issues for

AR, and makes a number of contributions toward making AR systems able to

operate independently in the future, particularly for use in outdoor

environments.

The development of user interfaces for

mobile outdoor AR systems is currently an area with many unsolved problems. When

technology is available in the future that solves existing registration and

tracking problems, having powerful applications that can take advantage of this

technology will be important. Azuma et al. state that "we need a better

understanding of how to display data to a user and how the user should interact

with the data" [AZUM01]. In his discussions of virtual reality

technology, Brooks stated that input devices and techniques that substitute for

real interactions were still an unsolved problem and important for interfacing

with users [BROO97]. While VR is a different

environment in that the user is fully immersed and usually restricted in

motion, the 3D interaction problems Brooks discusses are similar and still

relevant to the AR domain. Working in an outdoor environment also imposes more

restrictions due to its mobile nature, and increases the number of problems to overcome.

On desktop computers, the ubiquitous WIMP

interface (windows, icons, menus, and pointer - as pioneered by systems such as

the Xerox Star [JOHN89]) is the de-facto standard user

interface that has been refined over many years. Since mobile outdoor AR is a

unique operating environment, many existing input methodologies developed for

AR/VR and desktop user interfaces are unavailable or unsuitable for use. In an

early paper about 3D modelling on a desktop, Liang and Green stated that

mouse-based interactions are bottlenecks to designing in 3D because users are

forced to decompose 3D tasks into separate 1D or 2D components [LIAN93]. Another problem is that most

desktop 2D input devices require surfaces to operate on, and these are

unavailable when walking outdoors. Rather than trying to leverage WIMP-based

user interfaces, new 3D interfaces should be designed that take full advantage

of the environment and devices available to the user. An advantage of AR and VR

is that the user's body can be used to control the view point very intuitively,

although no de-facto standard has emerged for other controls in these

applications. While research has been performed in the VR area to address this,

many techniques developed are intended for use in immersive environments (which

do not have physical world overlay requirements) and with fixed and limiting

infrastructure (preventing portability).

With AR systems today (and with virtual

environments in general), a current problem is the supply of 3D models for the

computer to render and overlay on the physical world. Brooks mentions that 3D

database construction and modelling is one of four technologies that are

crucial for VR to become mainstream, and is still an unsolved problem [BROO97]. He mentions that there is promising

work in the area of image-based reconstruction, but currently modelling is

performed using CAD by-products or hard work. An interesting problem area to

explore is what types of models can be created or captured directly while

moving around outdoors. By integrating the modelling process and user

interface, the user is able to control the modelling process directly and take

advantage of their extensive knowledge of the environment.

1.1.1 Research questions

For this dissertation I have investigated

a number of different unsolved problems in the field of AR, and then combined

the solutions developed to produce real world applications as demonstrations. I

have formulated these different problems into research questions that will be

addressed in this dissertation:

• How can a user intuitively control and interact with a mobile AR system outdoors, without hampering their mobility or encumbering their hands?

• How can a user perform manipulation tasks (such as translate, rotate, and scale) on existing 3D geometry with an outdoor mobile AR system, in many cases out of arm's reach and at scales larger than the user's body?

• How can a user capture 3D geometry representing objects that exist in the physical world, or create new 3D geometry of objects that the user can preview alongside physical world objects, out of arm's reach and at scales larger than the user's body?

• What is an appropriate software architecture to develop this system with, to operate using a wide variety of hardware and software components, and to simplify application development?

• What hardware must be developed and integrated into a wearable platform so that the user can perform AR in the physical world outdoors?

• What application domains can take advantage of the novel ideas presented in this dissertation, and to what real world uses can they be applied?

1.1.2 Research goals

The main goal of this dissertation is to

answer the questions discussed previously and contribute solutions towards the

problems facing augmented reality today, predominantly in the area of user

interfaces for mobile outdoor systems. The specific research goals of this

dissertation are as follows:

• Mobile user interface - The user interface should not unnecessarily restrict the user's mobility, and should use intuitive and natural controls that are simple to learn and use. Requiring the user to carry and manipulate physical props as controls may interfere with the user's ability to perform a required task, such as holding a tool.

• Real-time modelling enhanced with proprioception - The user should be able to interactively create and capture the geometry of buildings using the presence of their body. Using solid modelling operations and the current position and orientation of the head and hands will make this process intuitive for the user.

• Mobile augmented reality - Outdoor augmented reality requires a mobile computer, a head mounted display, and tracking of the body. These technologies currently suffer from a number of limitations and applications must be designed with this in mind to be realisable. By using current technology, new ideas can be tested immediately rather than waiting for future technology that may not appear in the short term.

1.2 Thesis statement

Using parts of the body such as the head

and hands to perform gestures is a natural way of interacting with 3D

environments, as humans are used to performing these actions when explaining

operations to others and dealing with the physical world. By using techniques

such as pointing and grabbing with objects in positions relative to the body,

user interfaces can leverage proprioception, the user's inbuilt knowledge as to

what their body is doing. Mine et al. [MINE97a] demonstrated that designing user

interfaces to take advantage of a human's proprioceptive capabilities produced

improved results. Using an input device such as a mouse introduces extra levels

of abstraction for the direct manipulation metaphor (as discussed by Johnson et

al. [JOHN89]), and so using the head and hands

allows more intuitive controls for view point specification and object

manipulation.

Trying to leverage existing 2D input

devices for use in a naturally 3D environment is the wrong approach to this

problem, and designing proper 3D user interfaces that directly map the user to

the problem (as well as taking advantage of existing interactive 3D research)

will yield improved results. The use of 3D input devices has been demonstrated

to improve design and modelling performance compared to

2D desktop systems that force the user to break down 3D problems into separate

and unnatural 2D operations [CLAR76] [SACH91] [BUTT92] [LIAN93].

In VR environments the user is able to

use combinations of physical movement and virtual flying operations to move

about. In contrast, in AR environments the user is required to always move with

their physical body, otherwise registration between the physical and artificial

worlds will be broken. Using direct manipulation

techniques, interacting with objects that are too large or too far away is not

possible. Desktop-based CAD systems rely on 2D inputs but can perform 3D

operations through the use of a concept named working planes. By projecting a

2D input device cursor onto a working plane, its full 3D coordinates can be

calculated unambiguously. By extending the concept of working planes to

augmented reality, both the creation of geometry and interaction with objects

at a distance can be achieved. These working planes can be created using the

physical presence of the body or made relative to other objects in the

environment. Accurate estimation of the depth of objects at large distances

has been shown to be difficult for humans [CUTT95], and AR working planes provide

accurate specification of depth. This functionality is achieved at the cost of a

slightly increased number of interaction steps and a reduction in available

degrees of freedom.
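
The projection of a 2D cursor onto a working plane can be illustrated as a ray-plane intersection. The following is a minimal sketch, assuming the cursor has already been converted into a line of sight from the user's eye; the function name, parameter names, and example values are hypothetical and are not taken from the Tinmith software.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def project_cursor_onto_plane(
    eye: Vec3,
    ray_dir: Vec3,
    plane_point: Vec3,
    plane_normal: Vec3,
    eps: float = 1e-9,
) -> Optional[Vec3]:
    """Intersect the line of sight through the 2D cursor with a working plane.

    eye          -- assumed head position in world coordinates
    ray_dir      -- direction from the eye through the cursor (any length)
    plane_point  -- any point on the working plane
    plane_normal -- normal vector of the working plane
    Returns the 3D hit point, or None when no usable intersection exists.
    """
    denom = dot(ray_dir, plane_normal)
    if abs(denom) < eps:
        return None  # line of sight is parallel to the plane
    offset = (
        plane_point[0] - eye[0],
        plane_point[1] - eye[1],
        plane_point[2] - eye[2],
    )
    t = dot(offset, plane_normal) / denom
    if t < 0.0:
        return None  # plane lies behind the viewer
    return (
        eye[0] + t * ray_dir[0],
        eye[1] + t * ray_dir[1],
        eye[2] + t * ray_dir[2],
    )


# Illustrative values: a working plane 50 m in front of a user looking along -Z.
hit = project_cursor_onto_plane(
    eye=(0.0, 0.0, 0.0),
    ray_dir=(0.1, 0.0, -1.0),
    plane_point=(0.0, 0.0, -50.0),
    plane_normal=(0.0, 0.0, 1.0),
)
print(hit)
```

The plane supplies the depth that a 2D cursor alone cannot, which is why the result is unambiguous whenever the line of sight is not parallel to the plane.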

By combining AR working planes with

various primitive 3D objects (such as planes and cubes) and traditional

constructive solid geometry techniques (such as carving and joining objects),

powerful modelling operations can be realised, which I have termed construction

at a distance. These operations give users the capability to capture 3D models

of existing outdoor structures (supplementing existing surveying techniques),

create new models for preview that do not currently exist, and perform editing

operations to see what effect changes have on the environment. By taking

advantage of a fully tracked AR system outdoors, and leveraging the presence of

the user's body, interactive modelling can be supported in an intuitive

fashion, streamlining the process for many types of real world applications.
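
The carving and joining operations mentioned above can be sketched with a simple point-membership model of constructive solid geometry. This is an illustrative assumption only: solids are represented here as inside/outside tests built from half-spaces behind infinite planes, which is not necessarily the representation used in this dissertation, and all function names and dimensions are hypothetical.

```python
from typing import Callable, Tuple

Point = Tuple[float, float, float]
Solid = Callable[[Point], bool]  # returns True when the point lies inside the solid


def half_space(plane_point: Point, normal: Point) -> Solid:
    """Region behind an infinite plane (the side the normal points away from)."""
    def inside(p: Point) -> bool:
        return sum((p[i] - plane_point[i]) * normal[i] for i in range(3)) <= 0.0
    return inside


def intersect(*solids: Solid) -> Solid:
    """Points that are inside every given solid."""
    return lambda p: all(s(p) for s in solids)


def join(*solids: Solid) -> Solid:
    """Points that are inside at least one given solid."""
    return lambda p: any(s(p) for s in solids)


def carve(solid: Solid, cutter: Solid) -> Solid:
    """Points inside the solid with the cutter volume removed."""
    return lambda p: solid(p) and not cutter(p)


def box(lo: Point, hi: Point) -> Solid:
    """Axis-aligned box built as the intersection of six half-spaces."""
    return intersect(
        half_space(lo, (-1.0, 0.0, 0.0)), half_space(hi, (1.0, 0.0, 0.0)),
        half_space(lo, (0.0, -1.0, 0.0)), half_space(hi, (0.0, 1.0, 0.0)),
        half_space(lo, (0.0, 0.0, -1.0)), half_space(hi, (0.0, 0.0, 1.0)),
    )


# Illustrative values: a 10 x 10 x 5 m building with a doorway carved into one wall.
building = box((0.0, 0.0, 0.0), (10.0, 10.0, 5.0))
doorway = box((4.0, -1.0, 0.0), (6.0, 1.0, 2.0))
carved = carve(building, doorway)
extended = join(building, box((10.0, 0.0, 0.0), (12.0, 10.0, 5.0)))

print(building((5.0, 0.5, 1.0)), carved((5.0, 0.5, 1.0)), extended((11.0, 5.0, 1.0)))
```

A point-membership model keeps the sketch short; a system that must render the result would also need to compute the boundary surfaces of the combined solid.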

1.3 Contributions

This dissertation makes a number of

research contributions to the current state of the art in augmented reality and

user interfaces. Some of the initial contributions in this dissertation also

require a number of supporting hardware and software artefacts to be designed

and developed, each with their own separate contributions. The full list of

contributions is:

∑ The analysis of current techniques for distance estimation and

action at a distance, and the formulation of a technique named augmented

reality working planes. This new technique can create objects accurately at

large distances through the use of line of sight techniques and the projection

of 2D cursors against planes. This technique is usable in any kind of virtual

environment and is not limited to augmented reality. [PIEK03c]

The

design and implementation of a series of techniques I term construction at a

distance, which allow users to capture the 3D geometry of existing outdoor

structures, as well as create 3D geometry for non-existent structures. This

technique is based on AR and uses the physical presence of the user to control

the modelling. Objects can be modelled that are at scales much larger than the

user, and out of arm's reach. [PIEK03c]

• The iterative design and development of an augmented reality user interface for

pointing and command entry, allowing a user wearing gloves to navigate through

and select menu options using finger presses, without requiring high fidelity

tracking that is unavailable outdoors. This user interface can operate without

tracking, but when tracking is available it allows interaction at a distance

with 3D environments. [PIEK03d]

• The development of a vision-based hand tracking system using custom designed pinch

gloves and existing fiducial marker tracking software that can work reliably

under outdoor wearable conditions. [PIEK02f]

• The development of applications that allow users to model buildings with the techniques described in this dissertation, and in some cases to model objects that could not previously be captured with existing surveying techniques, or to model them faster than those techniques allow. [PIEK01b] [PIEK03c]

• The design and implementation of a software architecture that is capable of

supporting the research for this dissertation, culminating in the latest design

of the Tinmith software. Current software architectures are still immature and

do not support all the requirements for this dissertation, and so this

architecture was designed to support these requirements and implements many

novel solutions to various problems encountered during the design. [PIEK01c] [PIEK02a] [PIEK03f]

• The iterative design and development of the hardware required to demonstrate the applications running outdoors. Wearable computing is currently in its infancy, so the devices that must be used outdoors are not necessarily designed for this purpose; numerous problems were encountered, for which novel solutions have been implemented. [PIEK02h]
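
The first contribution above, the projection of a 2D cursor against a working plane along the user's line of sight, amounts geometrically to a ray and plane intersection. The following is a minimal sketch of that idea only and is not taken from the Tinmith software; the function name `project_cursor_onto_plane`, its parameters, and the coordinate values used are hypothetical and chosen purely for illustration.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    """Component-wise difference a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def project_cursor_onto_plane(
    eye: Vec3,
    ray_dir: Vec3,
    plane_point: Vec3,
    plane_normal: Vec3,
    eps: float = 1e-9,
) -> Optional[Vec3]:
    """Intersect the line of sight through the cursor with a working plane.

    eye          -- tracked position of the user's head (assumed input)
    ray_dir      -- direction from the eye through the 2D cursor
    plane_point  -- any point on the working plane
    plane_normal -- normal vector of the working plane
    Returns the 3D intersection point, or None if the ray is parallel
    to the plane or the plane lies behind the viewer.
    """
    denom = _dot(ray_dir, plane_normal)
    if abs(denom) < eps:
        return None  # line of sight never meets the plane
    t = _dot(_sub(plane_point, eye), plane_normal) / denom
    if t < 0:
        return None  # intersection is behind the viewer
    return (
        eye[0] + t * ray_dir[0],
        eye[1] + t * ray_dir[1],
        eye[2] + t * ray_dir[2],
    )


# Hypothetical usage: a user looking straight ahead at a vertical
# working plane placed 50 units in front of them.
hit = project_cursor_onto_plane(
    eye=(0.0, 1.7, 0.0),
    ray_dir=(0.0, 0.0, -1.0),
    plane_point=(0.0, 0.0, -50.0),
    plane_normal=(0.0, 0.0, 1.0),
)
```

Because the depth of the result is fixed by the plane rather than estimated by the user, the cursor only needs to supply a direction, which is why such an approach can remain accurate at distances where depth perception is poor.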

After this introduction chapter, chapter

two contains an overall background discussion introducing the concepts and

technology that form a core of this dissertation. This overview provides a

general discussion of related research, and each chapter then includes other

more specific background information when relevant. Chapter three discusses the

problems associated with a user interacting with objects at large distances. While

most VR systems take advantage of a human's ability to work well within

arm's reach, outdoor AR work tends to be performed away from the body where

depth perception attenuates very rapidly. The novel idea of using the concept

of CAD working planes for AR is introduced, along with various techniques that

can be employed to perform interaction at a wide range of scales and distances

beyond the reach of the user. Chapter four takes the concepts previously

developed in the dissertation as well as constructive solid geometry and

develops a new series of techniques named construction at a distance that allow

users to perform modelling of outdoor objects using a mobile AR system. These

techniques are then demonstrated using a series of examples of outdoor objects

to show their usefulness. Chapter five explains how the previously developed

techniques are interfaced to the user through pointing with the hands, command

entry using the fingers, and the display of data to the HMD. The user interface

is required to develop applications that are useable tools - an important part

of this dissertation is the ability to test out the techniques and improve them

iteratively. Chapter six introduces the software architecture used to

facilitate the development of virtual environment applications. The software

architecture contains a number of novel features that simplify the programming

of these applications, unifying all the components with a consistent design

based on object-oriented programming, data flow, and a Unix file system-based object

repository. By tightly integrating components normally implemented separately,

such as the scene graph, user interface, and internal data handling,

capabilities that are normally difficult to program can be handled with

relative ease. To complete the research, chapter seven describes the hardware

components that are an important part of the overall implementation since the

software relies on these to execute. The research and development of the mobile

backpack, user input glove, and vision tracking system are explained to show

some of the important new innovations that have been developed in these areas

for use outdoors. After concluding with a discussion of the numerous

contributions made and future work, this dissertation contains an appendix with

a history of my previous hardware and software implementations, as well as

links to where further information can be found about the project.

Chapter 2 - Background

"If you want to make an apple pie

from scratch, you must first create the universe."

Carl Sagan

This chapter contains a summary of the

state of the art in augmented reality research and related technologies that

are relevant to this dissertation. Some of this information has been developed

during the period of my research and is included so comparisons can be made.

First, an extended (from chapter 1) description and definition of augmented

reality is presented, followed by a discussion of how it fits into the spectrum

of virtual environments. The chapter then goes on to discuss various indoor and

outdoor AR applications that have been developed, demonstrating the current

state of the art. Following this is a discussion of the two techniques for

performing real-time AR overlay, as well as a summary of the numerous types of

tracking technologies employed. After these technologies have been explained, a

history of human computer interaction techniques for desktop and virtual

reality systems is then covered. Current techniques used for the capture of

models in the physical world are then discussed, followed by a section

summarising commercially available CAD software and solid modelling techniques.

Finally, the problems of working outdoors with wearable computers are

described, including how they can be used for mobile augmented reality.

When Sutherland proposed the concept of

the Ultimate Display [SUTH65], his goal was to generate

artificial stimulus that would give the user the impression that the experience

is real. Instead of immersing the user into an artificial reality, a second

approach is to augment the user's senses with extra information, letting them

experience both artificial and real stimulus simultaneously. In his excellent

survey paper of the field, Azuma defines augmented reality systems as those

that contain the following three characteristics [AZUM97a]:

• Combines real and virtual

• Interactive in real-time

• Registered in 3D

This definition does not limit augmented

reality to the use of head mounted displays (allowing for monitors, projectors,

and shutter glasses), but excludes non-interactive media such as movies and

television shows. This dissertation focuses on mobile outdoor augmented

reality, and therefore this chapter will focus only on research related to head

mounted displays.

Figure 2‑1 Example of Milgram and Kishino's reality-virtuality continuum (adapted from [MILG94])

With the availability of real-time

computer-generated 3D graphics, computers can render synthetic environments on

a display device that can give the user the impression they are immersed within

a virtual world. This technology is referred to as virtual reality (VR) and is

designed to simulate with a computer the physical world humans normally can

see. The opposite of VR is the real physical world typically experienced by a

human, although it may be slightly attenuated because it is being viewed via a

head mounted display or video camera. Augmented reality is therefore made up of

a combination of virtual and real environments, although the exact make up of

this may vary significantly. Milgram and Kishino used these properties to

define a reality-virtuality continuum [MILG94], and this can be used to perform

comparisons between various forms of mixed reality by placement onto a

spectrum. At one end of the continuum is the physical world, the other end is

fully synthetic virtual environments, and AR is located somewhere in between

since it is a combination of the two. Figure 2‑1 is adapted from Milgram

and Kishino's continuum, with example pictures at different locations on the

reality-virtuality spectrum but showing the view from the same location. The

first image in Figure 2‑1 shows a view of the physical world seen through

a head mounted display, with no virtual information at all. The next image is augmented

reality, where artificial objects (such as the table) are added to the physical

world. The third image is augmented virtuality, where physical world objects

(such as a live display of the user's view of the world) are added into a fully

immersive virtual environment. The final image depicts a completely synthetic

environment, with no information from the physical world being presented. Every

type of 3D environment can be placed somewhere along this spectrum, which can

then be used to compare and contrast the properties of these environments.

To overlay 3D models on to the user's

view, a mobile AR system requires an HMD to be combined with a device that can

measure the position and orientation of the user's head. As the user moves

through the physical world the display is updated by the computer in real-time.

The accuracy of the virtual objects registered to the physical world influences

the realism of the fusion that the user experiences. A major focus of current

AR research has been achieving good registration, as discussed extensively in

survey papers by Azuma [AZUM97a] and Azuma et al. [AZUM01]. There are a number of known

problems that cause poor registration, such as tracker inaccuracies, HMD

misalignment, and delays in the various stages of rendering from the trackers

to the display.
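
To give a rough sense of why tracker inaccuracies matter for registration, an orientation error of a given angle displaces a virtual object from its intended position by approximately the viewing distance multiplied by the tangent of that angle. The sketch below works through this relationship; the function name `registration_offset_m` and the example figures are illustrative assumptions and are not measurements from this dissertation.

```python
import math


def registration_offset_m(angular_error_deg: float, distance_m: float) -> float:
    """Approximate lateral misregistration caused by an orientation error.

    angular_error_deg -- assumed error in the tracked head orientation
    distance_m        -- distance from the user to the virtual object
    Returns the sideways displacement (in metres) of the object from
    where it should appear, using offset = distance * tan(error).
    """
    return distance_m * math.tan(math.radians(angular_error_deg))


# Illustrative values only: the same hypothetical 1 degree error
# evaluated at arm's reach and at a typical outdoor distance.
near_offset = registration_offset_m(1.0, 0.5)
far_offset = registration_offset_m(1.0, 100.0)
```

Under these assumed values, the same angular error that shifts an object by under a centimetre at arm's reach shifts it by more than a metre at 100 metres, which suggests why orientation accuracy is particularly important for outdoor AR at a distance.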

While registration is important for

producing AR applications that are realistic (giving the user a sense of

presence and hence being more immersive and easier to use) it is not the only

important issue in AR research. Other questions, such as how users interface

with these systems and what kinds of tasks the systems can perform, are also

important, and answering them makes the registration research useable for building real world applications.

During the evolution of technologies such

as virtual reality and augmented reality, there have been a number of

applications developed that demonstrate the use of this technology. In the

field of augmented reality, this research work began indoors, where

hardware can be large and consume considerable electrical power without

imposing too many restrictions on its use. As hardware has become smaller in

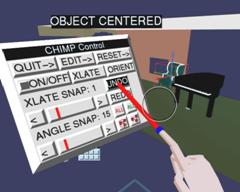

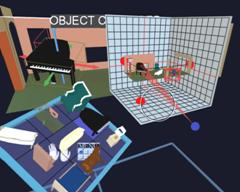

size and more powerful, researchers are demonstrating more complex systems and